Plexus Corporation has played a key role in the development, manufacturing, and delivery of these Data Vortex validation products. This ITAR-compliant, American-based company is committed to excellence at every stage of the process, from engineering through manufacturing. Working side by side, our teams have made our differentiating designs a market-ready reality.

Legacy Data Vortex® Validation Systems Frequently Asked Questions (FAQs)

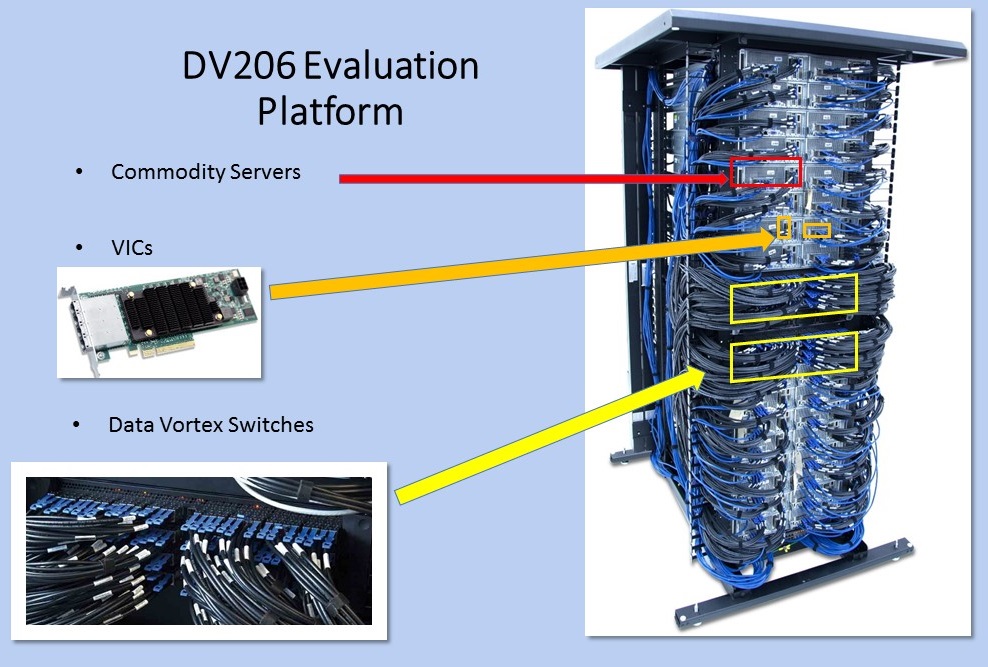

Q: Within the context of the original Data Vortex validation systems, what makes up a compute node?

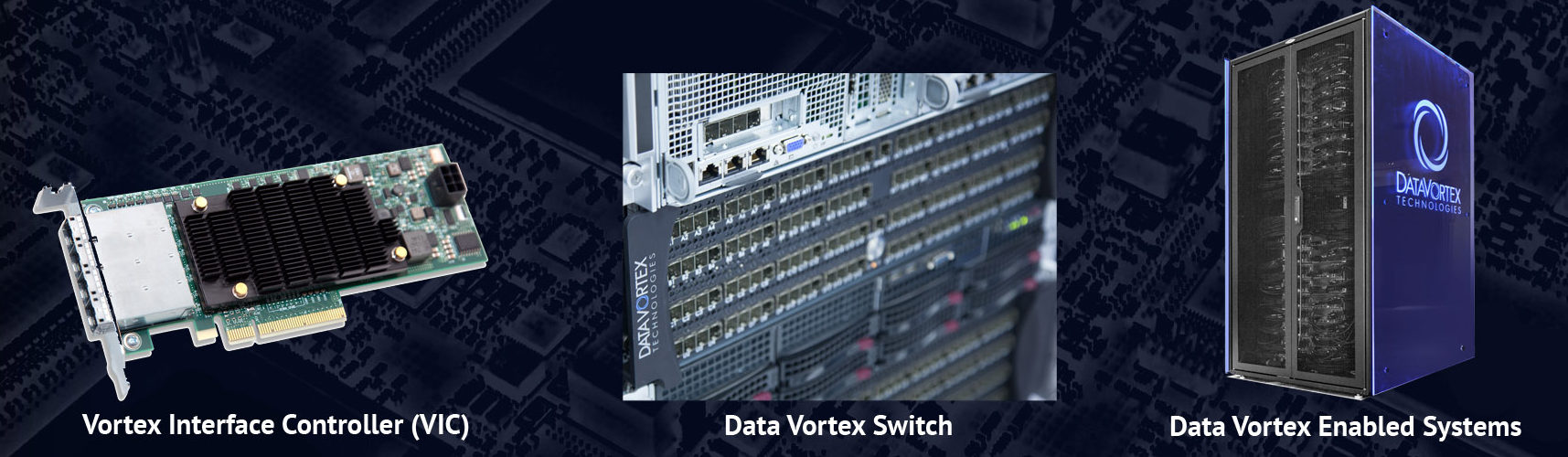

A: The compute nodes used in the legacy-generation Data Vortex validation systems were Intel-based, off-the-shelf commodity servers. Each server was populated with one or two high-end Intel Xeon processors, and each processor had 128 GB of DDR DRAM for local application use. Vortex Interface Cards (VICs) were installed in the servers so that each CPU had its own network interface. Additionally, each compute node had an InfiniBand card to support porting legacy software that relies on InfiniBand technology.

Q: Was the original Data Vortex network chip set built with FPGAs or custom ASICs?

A: The first-generation Data Vortex switches and VICs are built with Altera Stratix V FPGAs. Next-generation Data Vortex network chip sets will be built with Altera Stratix 10 FPGAs. Subsequent and current generations have been built on newer AMD/Xilinx and Intel FPGAs. The future Data Vortex network design roadmap is available under non-disclosure.

Q: How large could the legacy generation of Data Vortex computers be built?

A: In the legacy generation, 1-level systems were scalable up to 64 nodes (DV202 – DV206), 2-level systems up to 2,048 nodes (DV207 – DV211), and 3-level systems up to 65,536 nodes (DV212 – DV216). The Data Vortex 2-level switch has been designed and implemented in subsequent developments. Our 1-level vs. 2-level performance comparisons, run on our 2-level test bed platform, show that as levels and nodes are added, applications do NOT take the large performance hit seen on other systems.
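The scaling figures above can be made concrete with a short C sketch. It assumes, as an inference from the stated 64-node and 2,048-node limits rather than a documented rule, that each added switch level multiplies the maximum node count by 32. The function name `dv_max_nodes` and both constants are hypothetical and are not part of any Data Vortex software.

```c
#include <assert.h>
#include <stdint.h>

/* 1-level maximum quoted in the FAQ. */
#define DV_BASE_NODES 64u
/* ASSUMPTION: growth factor per added level, inferred from 2,048 / 64. */
#define DV_LEVEL_FACTOR 32u

/* Returns the maximum node count for a system with `levels` switch levels
   (1 to 3), under the assumed factor-of-32 growth per level. */
uint32_t dv_max_nodes(unsigned levels)
{
    uint32_t nodes = DV_BASE_NODES;
    unsigned i;

    assert(levels >= 1 && levels <= 3);
    for (i = 1; i < levels; ++i)
        nodes *= DV_LEVEL_FACTOR;
    return nodes;
}
```

Under that assumption, `dv_max_nodes(2)` gives 64 × 32 = 2,048 and `dv_max_nodes(3)` gives 64 × 32 × 32 = 65,536.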

Q: Is there a Data Vortex Users Group?

A: Yes. The inaugural Data Vortex Users Group meeting was hosted by Pacific Northwest National Laboratory in September 2017.

Q: What OS did the legacy validation systems run?

A: CentOS 7.2.1511

Q: What scheduler has been used?

A: Slurm 14.03.10

Q: How has the system been managed?

A: Cobbler & Ansible

Q: How has the user environment been managed?

A: Lmod, a Lua-based module system.

Q: What languages have been supported?

A: C, OpenMP, pthreads
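As a generic illustration of the C plus OpenMP combination listed above, here is a minimal sketch of a parallel array sum. It uses only standard C and a standard OpenMP pragma, not any Data Vortex API, and the function name `sum_array` is hypothetical. Loop variables are declared at the top of the function so the code also compiles in the older C dialect that gcc 4.8.5 uses by default.

```c
#include <assert.h>
#include <stddef.h>

/* Sums `count` doubles. When built with OpenMP enabled, the pragma splits
   the loop across threads and combines the per-thread partial sums. */
double sum_array(const double *values, long count)
{
    double total = 0.0;
    long i;

    assert(values != NULL || count == 0);

    #pragma omp parallel for reduction(+:total)
    for (i = 0; i < count; ++i)
        total += values[i];

    return total;
}
```

Building with `gcc -fopenmp` enables the parallel loop. Without that flag the pragma is ignored and the function runs serially.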

Q: Does the system support mixed mode programming?

A: Yes. Software can be written to take advantage of both the Data Vortex network and legacy MPI over InfiniBand.

Q: What was the default compiler?

A: gcc 4.8.5